In slit-scan photography, an image is composed of slices taken from a stack of (temporally) consecutive photographs. In slit-scan video, images are composed in real time from sections of other images held in a (sometimes evolving) video buffer. The Khronos Projector generates interactive slit-scan video, since the user can choose the exact place in the display area where the program will fetch pixels from other images in the video stack.
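The per-pixel lookup behind interactive slit-scan can be sketched in a few lines of Python with NumPy. This is a minimal illustration, not the Khronos Projector's actual code. It assumes that the buffer is an array of frames `buffer[t, y, x]` with index 0 as the newest frame. It also assumes that user input has already been turned into an integer map `delay`, giving for every pixel how many frames back to read. The name `slit_scan` is hypothetical.

```python
import numpy as np


def slit_scan(buffer: np.ndarray, delay: np.ndarray) -> np.ndarray:
    """Compose one output frame by sampling each pixel from its own past frame.

    buffer: shape (T, H, W) or (T, H, W, C); buffer[0] is the newest frame
            (an assumption of this sketch).
    delay:  integer array of shape (H, W); delay[y, x] says how many frames
            back pixel (y, x) is read from.
    """
    T, H, W = buffer.shape[:3]
    # Clamp delays so they always point inside the buffer.
    d = np.clip(delay, 0, T - 1)
    ys, xs = np.indices((H, W))
    # Advanced indexing: out[y, x] = buffer[d[y, x], y, x]
    return buffer[d, ys, xs]


if __name__ == "__main__":
    T, H, W = 8, 4, 8
    # Toy buffer: every pixel of frame t has value t, so the output shows the delays.
    buffer = np.arange(T).reshape(T, 1, 1) * np.ones((T, H, W), dtype=int)
    # Classic slit-scan: the delay grows from left to right, one frame per column.
    delay = np.tile(np.arange(W), (H, 1))
    print(slit_scan(buffer, delay))
```

Setting `delay` to the column index, as in the usage at the bottom, gives the classic slit-scan look. An interactive version would instead update `delay` from the user's gestures on every frame.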

Now, this is a “fine-grain” working mode, in which each pixel of the image runs its own individual clock. It is also interesting, though, to define larger picture elements (that is, larger “pixels”) as arbitrarily shaped regions made of many pixels: Voronoi cells or regular grids, for instance. These “cellular pixel elements”, or “cisels”, define sections of the image (here, rectangular ones) in which all pixels share the same temporal coordinate. In coarse-grain mode, each such “video-cell” runs its own clock.
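The two modes differ only in the delay map. The following is a sketch under assumptions, in Python with NumPy, and the function names are hypothetical. The first function expands one delay per rectangular cell into a per-pixel map. The second handles arbitrarily shaped cells, such as a Voronoi partition, given a label image that assigns each pixel to a cell. In both cases, every pixel inside a cell ends up with the same temporal coordinate.

```python
import numpy as np


def grid_delay_map(cell_delays: np.ndarray, cell_h: int, cell_w: int) -> np.ndarray:
    """Expand one delay per rectangular cell into a per-pixel delay map."""
    return np.repeat(np.repeat(cell_delays, cell_h, axis=0), cell_w, axis=1)


def labeled_delay_map(labels: np.ndarray, cell_delays: np.ndarray) -> np.ndarray:
    """General case: labels[y, x] is the index of the cell containing pixel (y, x)
    (e.g. from a Voronoi partition); each pixel takes the delay of its cell."""
    return cell_delays[labels]


if __name__ == "__main__":
    # A 2 x 3 grid of cells, each 2 x 2 pixels, each with its own delay.
    cells = np.array([[0, 5, 10],
                      [15, 20, 25]])
    print(grid_delay_map(cells, 2, 2))
```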

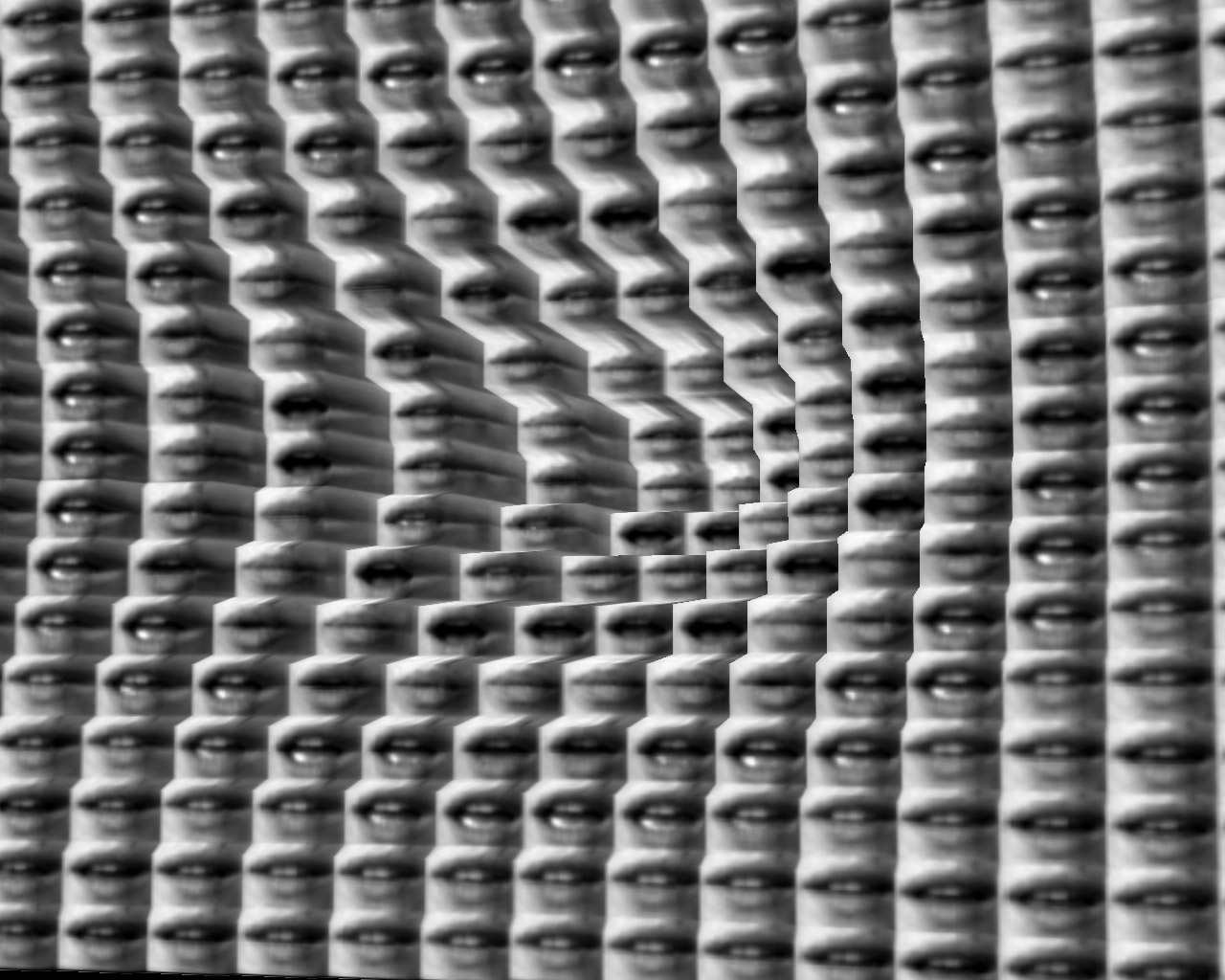

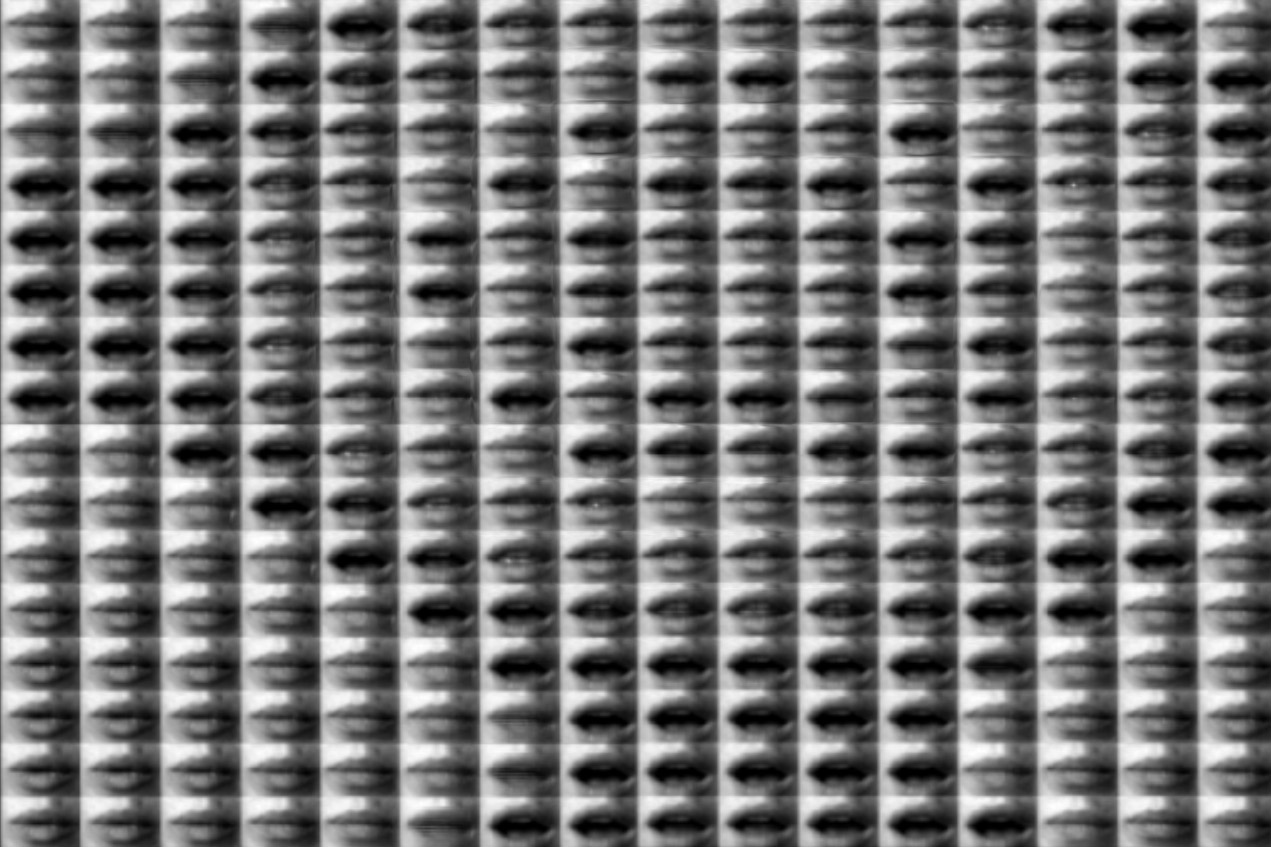

If we tile copies of the video so that each copy exactly matches the position and size of a temporal cell, the result looks like a matrix of thousands of randomly delayed TV sets stacked together. Well, not randomly delayed: by waving a hand or touching the tiled display, one can create spatial waves of “time delays” across the video-cells. For the example below, I chose a short video of a speaking mouth (thanks, Delphine!).
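Here is a sketch of this tiled arrangement in Python with NumPy, under stated assumptions. The buffer is an array of frames with index 0 as the newest. Each cell shows the whole frame, shrunk by nearest-neighbour sampling. `cell_delays` holds one integer delay per cell. The name `tiled_mosaic` is hypothetical, and this is not the code used to produce the videos.

```python
import numpy as np


def tiled_mosaic(buffer: np.ndarray, cell_delays: np.ndarray,
                 cell_h: int, cell_w: int) -> np.ndarray:
    """Tile a shrunken copy of the video into every cell; cell (i, j) shows the
    frame cell_delays[i, j] steps back in the buffer (buffer[0] is the newest)."""
    T, H, W = buffer.shape[:3]
    # Shrink every frame once (nearest neighbour) rather than once per cell.
    r = np.arange(cell_h) * H // cell_h
    c = np.arange(cell_w) * W // cell_w
    small = buffer[:, r][:, :, c]            # (T, cell_h, cell_w, ...)
    d = np.clip(cell_delays, 0, T - 1)       # (rows, cols), kept inside the buffer
    tiles = small[d]                         # (rows, cols, cell_h, cell_w, ...)
    rows, cols = d.shape
    # Put the pixel axes next to their cell axes so tiles sit side by side.
    tiles = np.swapaxes(tiles, 1, 2)         # (rows, cell_h, cols, cell_w, ...)
    return tiles.reshape((rows * cell_h, cols * cell_w) + buffer.shape[3:])


if __name__ == "__main__":
    # Toy buffer of 4 frames, each 4 x 4 and filled with its frame index.
    buf = np.arange(4).reshape(4, 1, 1) * np.ones((4, 4, 4), dtype=int)
    print(tiled_mosaic(buf, np.array([[0, 3], [1, 2]]), 2, 2))
```

Shrinking the whole buffer once, before picking frames, avoids resampling the same frame for every cell that happens to display it.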

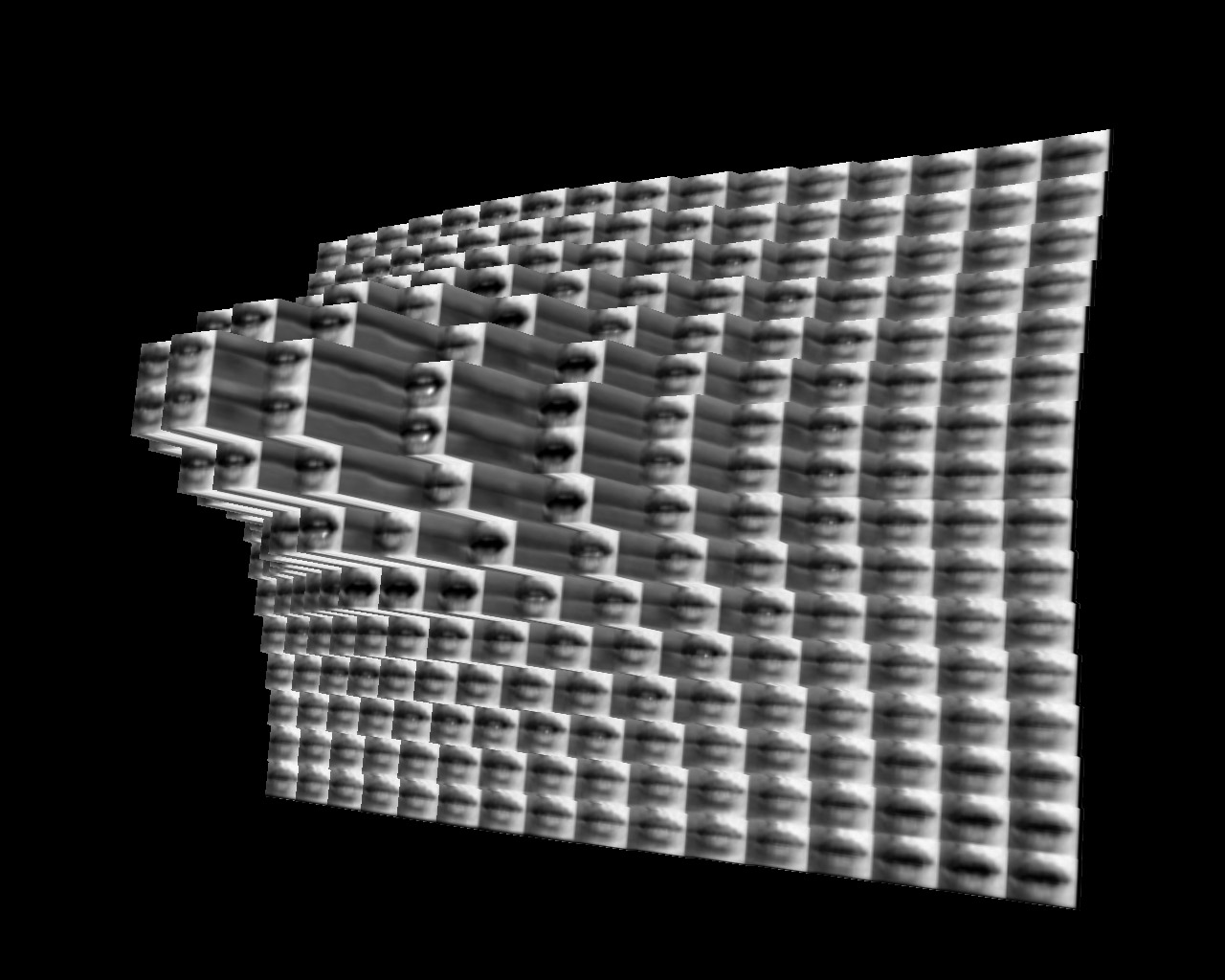

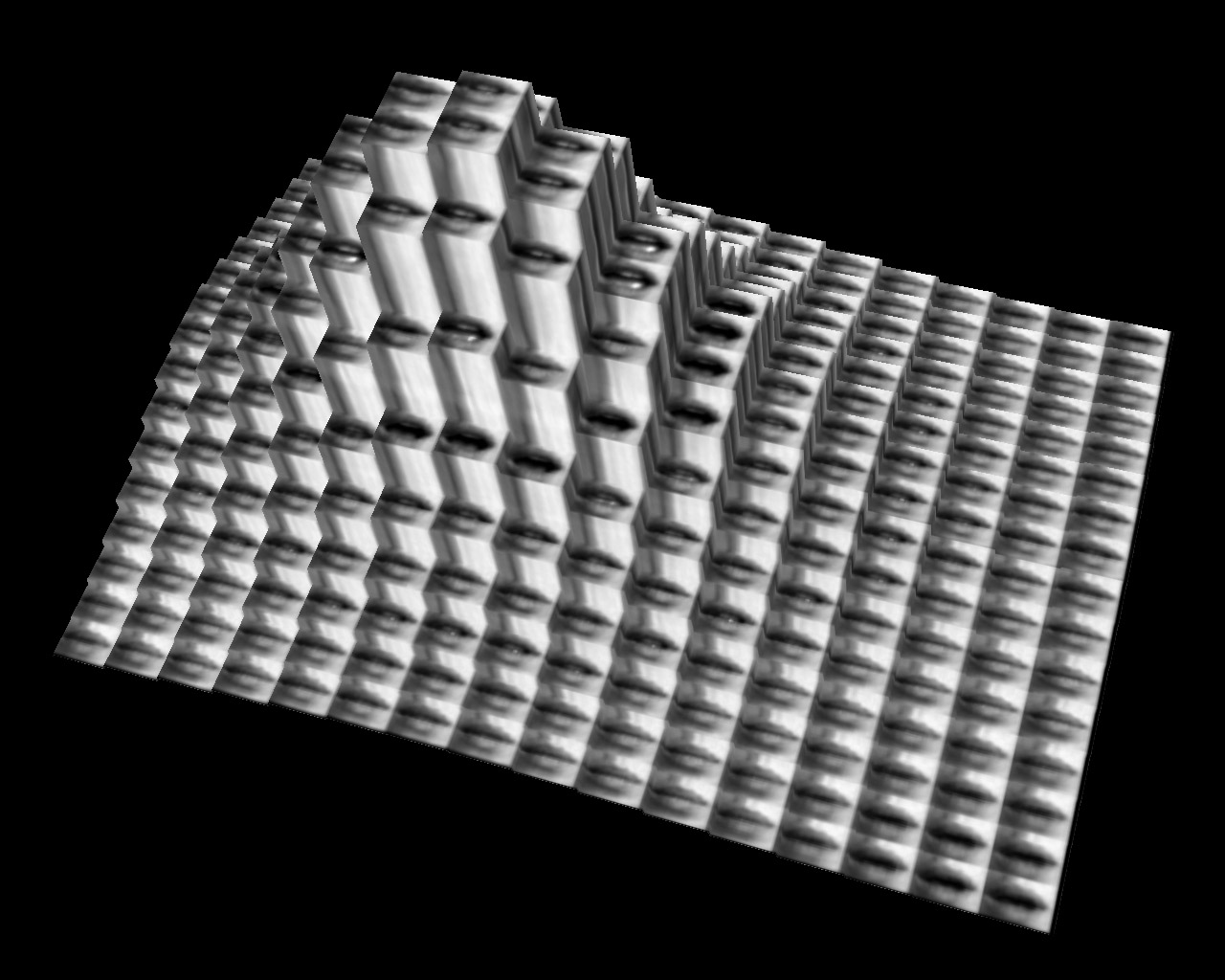

2D and 3D snapshots of a two-dimensional chorus of singing mouths (unfortunately, the audio is lost here). The patterns are controlled by waving a hand over the canvas, just as with the Khronos Projector. Because the frames within each video-cell differ in contrast from one another, the cells together can form macro-patterns (this principle is behind Daniel Rozin’s amazing mechanical “video mirrors”).
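The text does not describe how the hand position becomes a pattern of delays. As a purely hypothetical illustration in Python with NumPy, the following sketch computes a circular wave of delays spreading out from the cell under the hand. The name `ripple_delays`, the cosine profile, and the `wavelength` and `period` parameters are assumptions chosen for brevity.

```python
import numpy as np


def ripple_delays(rows: int, cols: int, hand: tuple, t: float,
                  max_delay: int, wavelength: float = 4.0,
                  period: float = 1.0) -> np.ndarray:
    """Hypothetical delay field: a circular wave of time delays spreading
    outward from the cell under the hand.

    hand: (row, col) of the cell closest to the hand.
    t:    time in seconds since the hand touched the canvas.
    Returns integer delays in [0, max_delay], one per cell.
    """
    i, j = np.indices((rows, cols))
    r = np.hypot(i - hand[0], j - hand[1])           # distance in cells
    # Constant-phase rings sit at r = wavelength * (t / period + k): they move outward.
    phase = 2 * np.pi * (r / wavelength - t / period)
    # Map cos() from [-1, 1] to [0, max_delay] and round to whole frames.
    return np.rint((1 + np.cos(phase)) / 2 * max_delay).astype(int)


if __name__ == "__main__":
    print(ripple_delays(7, 7, hand=(3, 3), t=0.0, max_delay=9))
```

If these per-cell delays were fed to the video-cells on every frame, bands of equal delay would travel outward across the grid. A different profile, for instance one where a touched region's delay relaxes back over time, would fit the same interface.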

Other ideas worth exploring

- If the video is properly edited, the time coordinate of each video-cell (i.e., the height of the columns in the 3D view) can be used directly to control hundreds of independent sound sources through MIDI (organ pipes? a mouth organ?). The sound parameters could, for example, be tied to how far each mouth is open.

- Spatialized sound: put speakers behind the projection screen (one right behind each mouth) so that the “spatial wave of gestures” translates into a real wave of spatialized sound.

- The Tourettic/schizophrenic array of a million squeaking voices: more interestingly, we can have these mouths say something more or less meaningful, such as short sentences, or just utter particular words at particular moments in the video timeline (soothing words, insulting words, etc.). The piece will then produce spatially travelling wavefronts of synchronized speaking mouths, propagating conquering, mantra-like words across the empty space of a large wall, or it may just sound like a pool full of croaking frogs.

- Fractal gesture: use other filmed gestures suited to forming macro-images (high contrast between postures?), while each gesture inside its video-cell remains interpretable. For instance: eyes opening and closing. Which brings me to a crazy idea: represent an eye using small eyes as pixels, and so on. Produce an infinitely zooming movie with no “initial” element: at any zoom level, the image of the eye is just a structure, an arrangement of other, smaller structures. Now, I can’t resist the digression: perhaps this is a metaphor for the real world. “Things” are probably no more than structures embedded in other structures, and so on ad infinitum. There may be nothing in the world equivalent to a fundamental “pixel” (or particle, for that matter).

- Conway’s Game of Life on a coarse-grained Khronos: a Conway “Game of Life” in which the state of each cell of the automaton is represented by a “state” of the mouth (open, closed, etc.). In fact, this is very simple to do: just assign a specific time coordinate to each automaton state (see image on