An extension of Kyle McDonald's “ofxCv” addon, this time capable of calibrating cameras AND projectors online (getting the intrinsics of both, plus the extrinsics, as a YML file) in a matter of minutes. It would also be possible to calibrate multiple projectors and multiple cameras.

Basically, the procedure is broken into three steps:

1) Start by calibrating the camera and saving its intrinsics (the program is very similar to the one in Kyle McDonald's ofxCv: https://github.com/kylemcdonald/ofxCv)

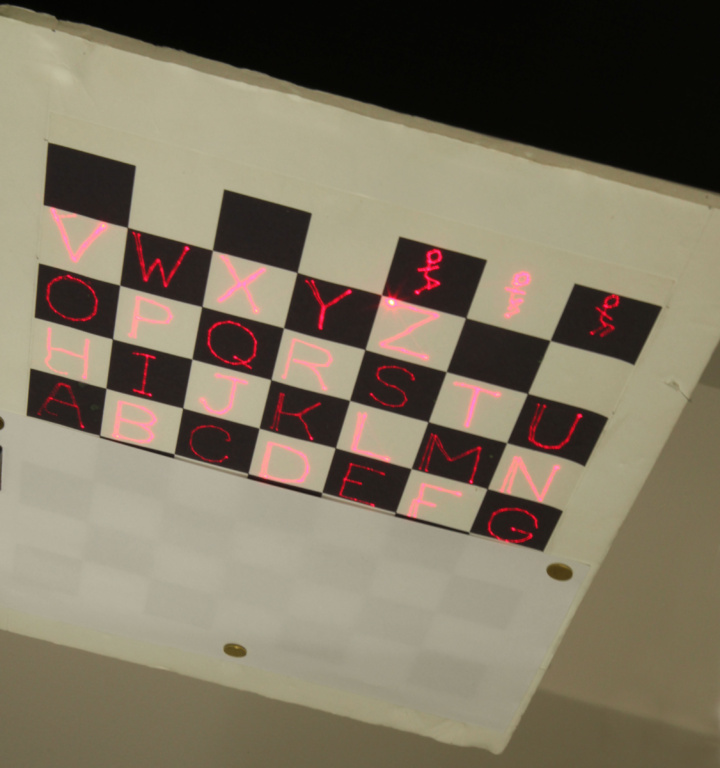

2) Camera/projector calibration starts: the projector projects a grid of circles (first in a fixed position; then, once the calibration has achieved some accuracy, the grid starts following the printed pattern). This step proceeds as follows:

(a) The camera is used to compute the 3D position of the projected circles (by backprojection) in the camera coordinate system, and then in the “board” coordinate system (or “world coordinates”). This is done in the method “backProject” (a method of the class Calibration, but called only when the object is of type “camera”).

(b) This means we can start computing the intrinsics of the projector, because we have 3D points (the projected circles) in world coordinates and their respective “projections” in the projector “image” plane.

(c) Finally, we can start computing the extrinsics of the camera-projector pair, because we have 3D points in “world coordinates” (the board), which are the projected circles, and also their projections (2D points) in the camera image and the projector “image”. This means we can use the standard stereo calibration routine in OpenCV. We compute the intrinsics of the projector FIRST because the method stereoCalibrationCameraProjector calls the OpenCV stereo calibration function with “fixed intrinsics” to ensure better convergence of the algorithm. Convergence is presumably better because, if the camera is very well calibrated first, the intrinsics of the projector should be good (probably better than if they were recomputed from all the 3D points and image points in camera and projector).
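To make the backprojection in (a) concrete, here is a minimal sketch in Python (the addon itself is C++; this is only an illustration, not the addon's code). It assumes an ideal pinhole camera without lens distortion, a board pose `R`, `t` such that `X_cam = R * X_board + t`, and a board lying in the plane `Z_board = 0`. The function name `back_project` and the tuple layout of `intrinsics` are hypothetical.

```python
def back_project(pixel, intrinsics, R, t):
    """Intersect the camera ray through `pixel` with the board plane.

    Assumptions (not taken from the addon): no lens distortion,
    intrinsics = (fx, fy, cx, cy), and X_cam = R * X_board + t with the
    board in the plane Z_board = 0.
    Returns (point in camera coordinates, point in board coordinates).
    """
    fx, fy, cx, cy = intrinsics
    u, v = pixel

    # Direction of the viewing ray through the pixel, in camera coordinates.
    d = ((u - cx) / fx, (v - cy) / fy, 1.0)

    # Board normal in camera coordinates: the third column of R.
    n = (R[0][2], R[1][2], R[2][2])

    denom = sum(ni * di for ni, di in zip(n, d))
    if abs(denom) < 1e-12:
        raise ValueError("ray is parallel to the board plane")

    # Solve n . (s * d - t) = 0 for the ray parameter s.
    s = sum(ni * ti for ni, ti in zip(n, t)) / denom
    p_cam = tuple(s * di for di in d)

    # Back to board ("world") coordinates: X_board = R^T * (X_cam - t).
    diff = tuple(p - ti for p, ti in zip(p_cam, t))
    p_board = tuple(sum(R[k][i] * diff[k] for k in range(3)) for i in range(3))
    return p_cam, p_board
```

For a board facing the camera two units away (`R` the identity, `t = (0, 0, 2)`), a detected circle centre backprojects to a point with `Z = 2` in camera coordinates and `Z = 0` in board coordinates. The resulting board-coordinate points, paired with the known circle positions in the projector image, are the kind of 3D/2D correspondences that steps (b) and (c) rely on.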

3) Once we get a good enough reprojection error (for the projector), we can start moving the projected points around so as to better explore the space (and get a better and more accurate calibration). In this phase, we can run OpenCV stereo calibration to obtain the camera-projector extrinsics and, again, we don't need to recompute the intrinsics of the projector. After a few cycles (and cleaning of bad “boards”), the process converges, the data is saved and you can do AR. There is a lot going on in the code to ensure we can do this in real time, acquire data only when the boards are not moving much, etc.
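The “cleaning of bad boards” can be pictured as follows: compute the RMS reprojection error of each captured board and drop the ones above a threshold. The Python sketch below is only one plausible reading of that step, not the addon's code. It assumes a distortion-free pinhole model, board points already expressed in the device's coordinate frame, and an arbitrary 2-pixel threshold. All function names are hypothetical.

```python
import math


def project(point, intrinsics):
    """Pinhole projection of a 3D point (device coordinates) to pixels."""
    fx, fy, cx, cy = intrinsics
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)


def board_rms_error(points_3d, observed_px, intrinsics):
    """RMS distance, in pixels, between projected and observed points."""
    squared = 0.0
    for point, (u, v) in zip(points_3d, observed_px):
        pu, pv = project(point, intrinsics)
        squared += (pu - u) ** 2 + (pv - v) ** 2
    return math.sqrt(squared / len(points_3d))


def clean_boards(boards, intrinsics, max_rms_px=2.0):
    """Keep only the boards whose RMS reprojection error is acceptable.

    `boards` is a list of (points_3d, observed_px) pairs; the threshold
    is an illustrative value, not one taken from the addon.
    """
    return [
        board for board in boards
        if board_rms_error(board[0], board[1], intrinsics) <= max_rms_px
    ]
```

Running the calibration again on the boards that survive this filter is what a "cycle" would amount to under these assumptions.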

I have been working with my colleague Niklas Bergström on an extension of Kyle McDonald's “ofxCv” addon, this time capable of calibrating cameras AND projectors online (getting the intrinsics of both, plus the extrinsics, as a YML file) in a matter of minutes. It would also be possible to calibrate multiple projectors and multiple cameras.

Although both camera and projector could be calibrated simultaneously, it is better to start by calibrating the camera; the camera is then used to compute the 3D position of the projected circles (by backprojection). We can then start calibrating the projector. Once we get a good enough reprojection error (for the projector), we can start moving the projected points around so as to better explore the space (and get a better and more accurate calibration). In this phase, we can run OpenCV stereo calibration to obtain the camera-projector extrinsics. After a few cycles (and cleaning of bad “boards”), the process converges, the data is saved and you can do AR (*)
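As an illustration of what the camera-projector extrinsics represent, the Python sketch below composes them from a single board pose seen by both devices. This is not what a stereo calibration routine does internally (that is an optimization over all captured boards); it only shows the geometry. It assumes poses of the form `X_cam = R_c * X_board + t_c` and `X_proj = R_p * X_board + t_p`, and the function names are hypothetical.

```python
def transpose(A):
    return [[A[j][i] for j in range(3)] for i in range(3)]


def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def mat_vec(A, v):
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]


def camera_to_projector(R_c, t_c, R_p, t_p):
    """Return (R, t) such that X_proj = R * X_cam + t.

    Derivation: X_board = R_c^T * (X_cam - t_c), substituted into
    X_proj = R_p * X_board + t_p, gives
    R = R_p * R_c^T and t = t_p - R * t_c.
    """
    R = mat_mul(R_p, transpose(R_c))
    Rt = mat_vec(R, t_c)
    t = [t_p[i] - Rt[i] for i in range(3)]
    return R, t
```

Once `R` and `t` are known, any 3D point measured in camera coordinates can be moved into projector coordinates and projected with the projector intrinsics, which is the basic operation behind projecting onto tracked objects.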

A cleaned-up version by Cyril Diagne is available here: https://github.com/kikko/ofxCvCameraProjectorCalibration

It has the advantage of working as an “addon” and being compatible with the original ofxCv.

Code on GitHub